Demos on Gazebo

Before running the demos it is necessary to get acquinted with the setup section of the documentation. Make sure you check: Using only the simulated robot

Launching the Simulation

To launch the simulator:

roslaunch robotont_gazebo gazebo.launch

The launch file has three arguments:

model - chooses between a model with NUC and realsense and a model without them

default: robotont_gazebo_nuc

options: robotont_gazebo_nuc, robotont_gazebo_basic

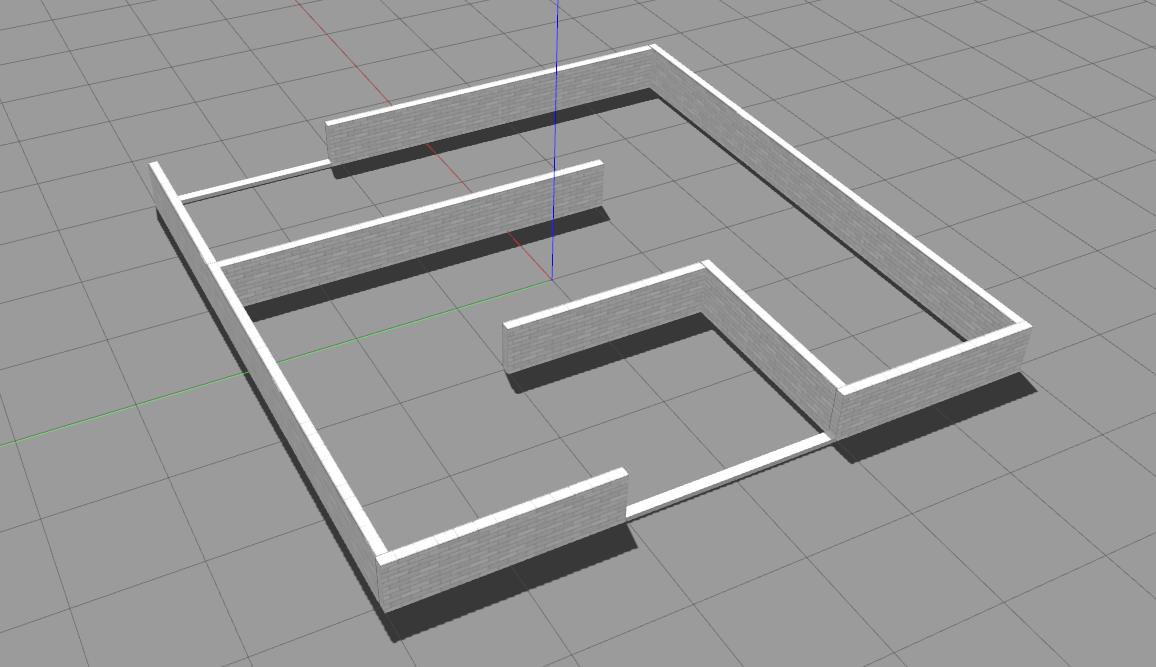

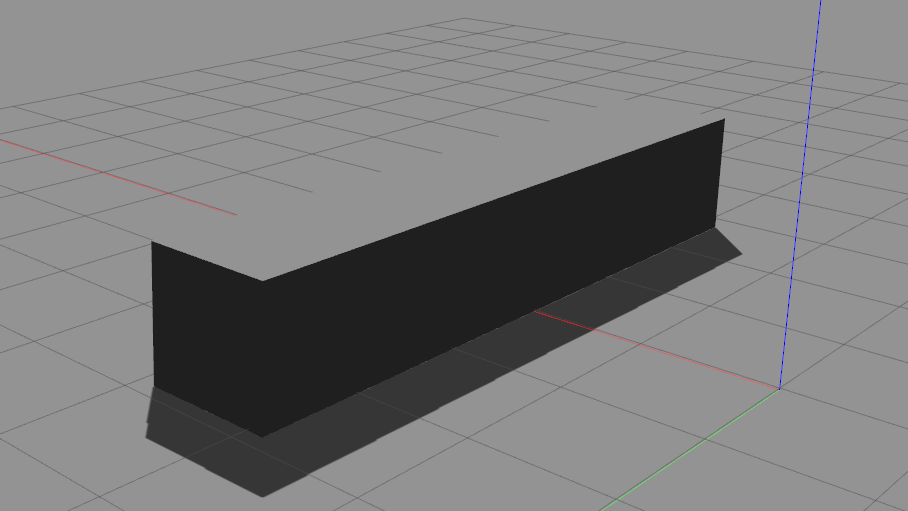

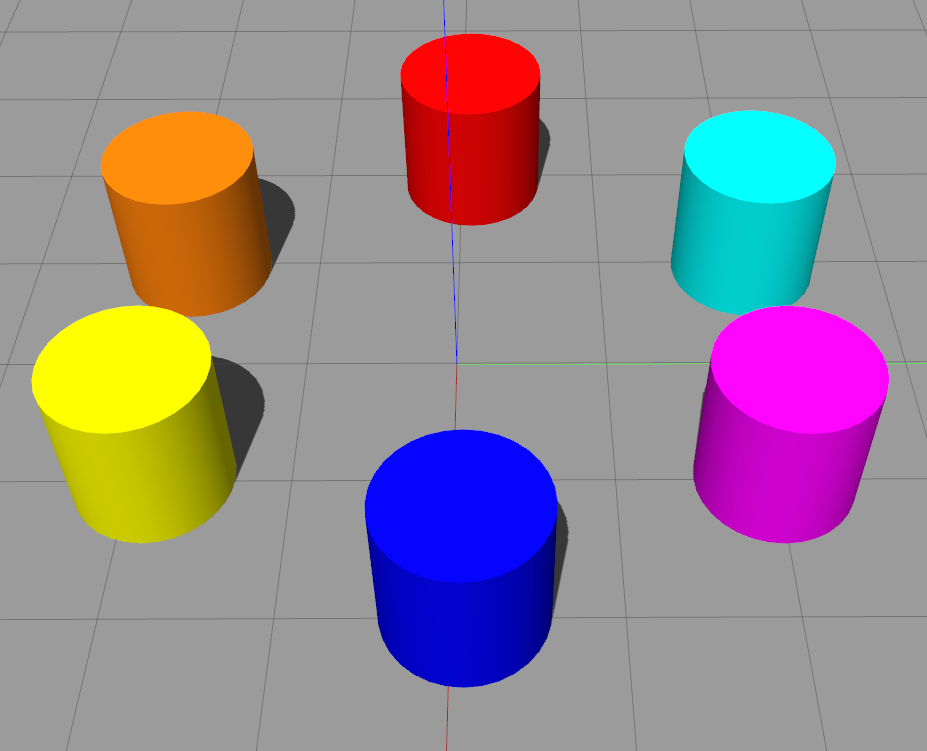

world - chooses which world to use

default: empty.world

options: empty.world, minimaze.world, bangbang.world, between.world, colors.world

x_pos - chooses x coordinate of the world, controls where the robot will spawn, default: 0

For example, the following command will spawn the robot to a world called bangbang.world in position x=2 and the model that will be used is robotont_gazebo_nuc.

roslaunch robotont_gazebo gazebo.launch world:=$(rospack find robotont_gazebo)/worlds/bangbang.world model:=robotont_gazebo_nuc x_pos:=2

Worlds

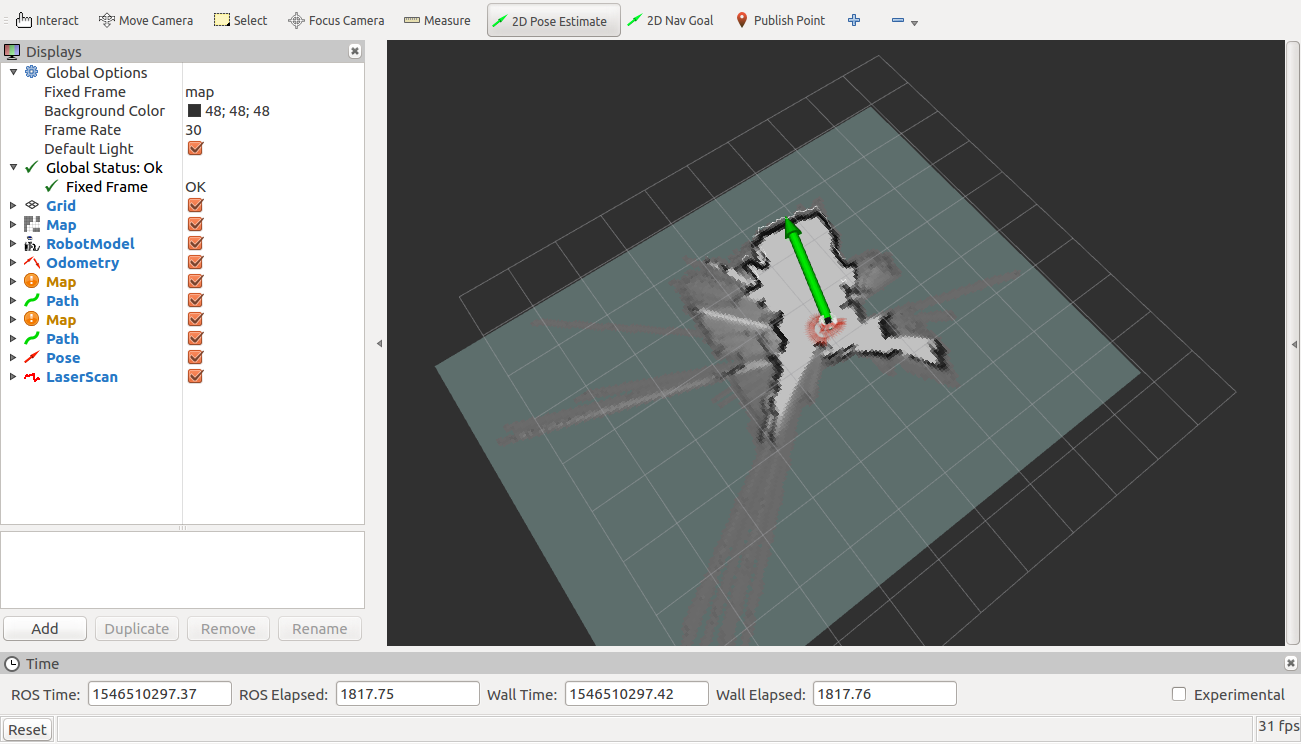

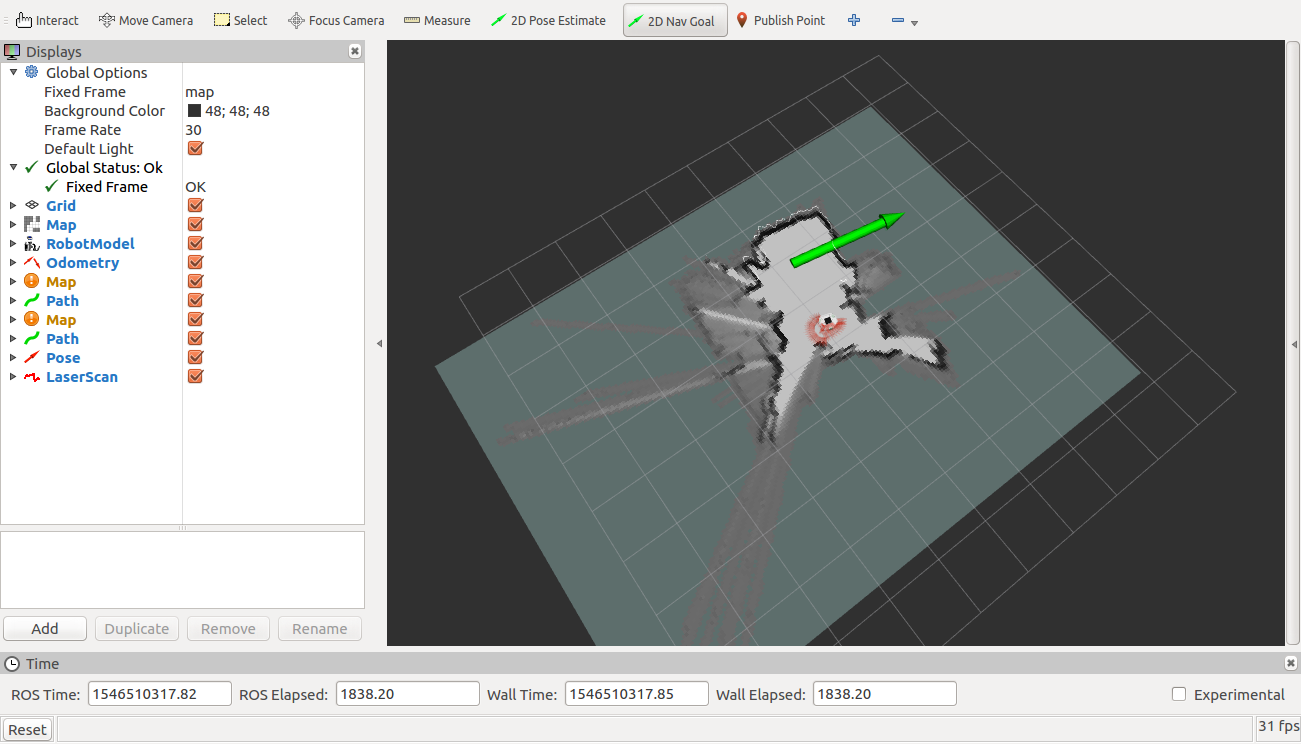

2D Mapping and Localization

Installation

The following packages are needed to run the 2d mapping demo:

sudo apt update sudo apt install ros-noetic-depthimage-to-laserscan sudo apt install ros-noetic-move-base

To run the 2D mapping demo, you need to clone the base package:

git clone https://github.com/robotont-demos/demo_slam.git

and choose a mapping method from the following:

Cartographer

Gmapping

Hector SLAM

Gmapping and AMCL

Installation

You can clone the package for the Gmapping method from this repository.

To clone the packages:

git clone https://github.com/robotont-demos/demo_slam_gmapping.git git clone https://github.com/robotont-demos/demo_teleop_keyboard.git

Running the demo

Launch the simulator

roslaunch robotont_gazebo world_minimaze.launch

Launch teleop keyboard

roslaunch robotont_demos teleop_keyboard.launch

Launch 2d_slam.launch

roslaunch demo_slam_gmapping 2d_slam.launch

Display the map on RViz

roslaunch demo_slam 2d_slam_display.launch

Cartographer

Installation

You can clone the package for the Cartographer method from this repository.

To clone the packages:

git clone https://github.com/robotont-demos/demo_slam_cartographer.git git clone https://github.com/robotont-demos/demo_teleop_keyboard.git

Running the demo

Launch the simulator

roslaunch robotont_gazebo world_minimaze.launch

Launch teleop keyboard

roslaunch demo_teleop teleop_keyboard.launch

Launch 2d_slam.launch

roslaunch demo_slam_cartographer 2d_slam.launch

Display the map on RViz

roslaunch demo_slam 2d_slam_display.launch

Hector SLAM

Installation

You can clone the package for the Hector SLAM method from this repository.

To clone the packages:

git clone https://github.com/robotont-demos/demo_slam_hector.git git clone https://github.com/robotont-demos/demo_teleop_keyboard.git

Running the demo

Launch the simulator

roslaunch robotont_gazebo world_minimaze.launch

Launch teleop keyboard

roslaunch demo_teleop teleop_keyboard.launch

Launch 2d_slam.launch

roslaunch demo_slam_hector 2d_slam.launch

Display the map on RViz

roslaunch demo_slam 2d_slam_display.launch

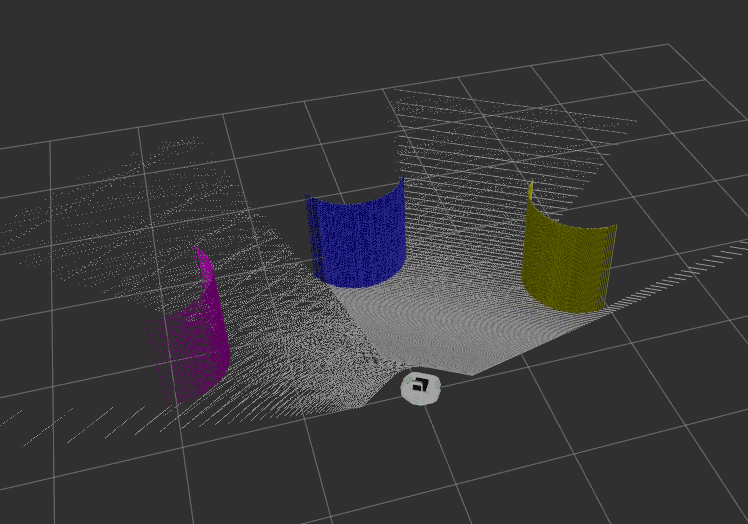

3D mapping

Creates a 3D map of the robot’s surroundings.

Installation

For 3D mapping:

sudo apt install ros-noetic-rtabmap-ros

and clone the following packages:

git clone https://github.com/robotont-demos/demo_mapping_3d.git git clone https://github.com/robotont-demos/demo_teleop_keyboard.git

Running the demo

Launch the simulator

roslaunch robotont_gazebo world_colors.launch

Launch mapping_3d.launch

roslaunch demo_mapping_3d mapping_3d.launch

Launch mapping_3d_display.launch to visualize the result

roslaunch demo_mapping_3d mapping_3d_display.launch

To move the robot open another terminal window and run teleop twist keyboard

rosrun demo_teleop teleop_keyboard.launch

Hint

Notice that the teleop node only receives keypresses when the terminal window is active.

The robot identifies and tracks the pose of the provided AR tag and acts accordingly.

Follow the leader

The follow the leader demo showing the capabilities of the Robotont platform to detect and follow the AR Tag.

Installation

For AR tracking:

git clone https://github.com/machinekoder/ar_track_alvar.git -b noetic-devel git clone https://github.com/robotont-demos/demo_ar_follow_the_leader.git

Running the demo

On the works

AR steering

The AR steering demo showing the capabilities of the Robotont platform to detect and follow the AR Tag.

For AR tracking:

git clone https://github.com/machinekoder/ar_track_alvar.git -b noetic-devel git clone https://github.com/robotont-demos/demo_ar_steering.git

Launch ar_steering.launch (change tag_nr with your AR tag number)

roslaunch demo_ar_steering ar_steering.launch marker_id:=tag_nr

Launch the simulator

roslaunch robotont_gazebo world_colors.launch

AR maze

The gazebo AR maze demo showing the capabilities of the Robotont platform to detect and follow the AR Tag.

For AR tracking:

git clone https://github.com/machinekoder/ar_track_alvar.git -b noetic-devel git clone https://github.com/robotont-demos/demo_ar_maze.git

Launch gazebo_ar_maze.launch

roslaunch demo_ar_maze gazebo_ar_maze.launch

Launch the simulator

roslaunch robotont_gazebo world_minimaze_ar.launch